
The Imperative of Measuring Energy Consumption in the Cloud: When and Why to Begin

- Author: Leonard Vincent Simon Pahlke

This is the first of three blog posts in the series "Unlock Energy Consumption in the Cloud with eBPF".

In our pursuit of building good software, we must be aware of many quality factors that make software engineering challenging (and fun). These factors include whether the software is maintainable after development, whether it is extensible and can adapt to changing requirements, whether it is portable and can be deployed to different infrastructures, and whether it is secure and reliable. There are numerous other nuances to consider, and each of them is worth a deep dive of its own. As software engineers, we strive to balance these requirements in order to build good software. Energy efficiency (along with other resource consumption metrics) is not yet commonly recognized as a quality requirement, but it should become one for a simple reason: software significantly influences how our societies are built, and this influence will only increase as the role of software across industries and societies continues to expand. You could say that with sustainable software engineering, we try to balance the virtual world we build with the real world we have. As we become more reliant on software, we need to start valuing natural resources and engineer not just for performance, user experience, and security, but also for environmental sustainability and, with that, energy efficiency.

In the hardware sector, we observe generation-to-generation efficiency improvements that also reduce overall energy consumption. By transitioning to ever smaller nanometer chip architectures, we can (usually) achieve more computing power with the same amount of energy. However, we are approaching a physical limit, and it is evident that Moore's Law is beginning to plateau. At the same time, software is not becoming more resource-efficient; for the most part, it is becoming more bloated. Software engineers recognized this issue over 30 years ago. An interesting article published in 1995 highlighted the concern:

About 25 years ago, a [...] text editor could be developed with only 8,000 bytes of memory. (Modern program editors require 100 times as much!) [...] Has all this bloated software become faster? Quite the opposite. If the hardware wasn't a thousand times faster, modern software would be completely useless [source].

Among the reasons the article gives for this trend are inflationary feature management, time pressure, and the complexity of tasks. You may think that this article is quite old for the fast-moving software space, but I think the observation still holds today. Not in every aspect, for sure: if the hardware offers more resources, software will naturally be developed to make the most of them. That is not the criticism. The criticism is aimed at a trend where software grows without good reason. It is a matter of setting requirements for resources. If we do not measure energy consumption and the use of natural resources, software will remain misaligned with environmental sustainability goals. Without knowledge, data, and metrics, we will not detect flaws in current tools and practices. To recognize new requirements, we first need to build a solid understanding and then have a tangible indicator that we can develop against. We are engineers, after all, right?

Examples – Exposing Bad Energy Efficiency

When energy is not treated as a metric during software development, technologies emerge that have a considerable negative impact on resource usage and energy consumption. To me, it seems that the energy and resource consumption of software is treated as an afterthought or, if done well, as a nice aspect of software design to highlight on the website for marketing.

Recent examples of high energy demand are LLMs (large language models) [source], cryptocurrencies, and blockchain technologies [source]. But this issue also extends, on a smaller scale, to everyday applications that are bloated with features. To be genuinely useful for a wide audience, innovative technologies should take energy efficiency and resource usage into account. Software has a maturity curve, and it is expected that technologies will be less efficient in their first year of release than they will be years later. However, this does not justify releasing software or offering services to a broad audience that in turn consume a gigantic amount of resources. It is not that simple.

[SIDE NOTE] Researchers estimated that creating GPT-3, which has 175 billion parameters, consumed 1,287 megawatt hours of electricity and generated 552 tons of carbon dioxide equivalent, the equivalent of 123 gasoline-powered passenger vehicles driven for one year. And that’s just for getting the model ready to launch, before any consumers start using it [source].
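To get a feel for the figures in this side note, the short sketch below derives two implied ratios from them: the average carbon intensity of the electricity used and the emissions attributed to one vehicle-year. It only rearranges the three quoted numbers. The function names are made up for illustration, and it assumes that "tons" means metric tons (1 t = 1,000 kg).

```python
# Figures quoted in the side note (GPT-3 training estimate).
ENERGY_MWH = 1_287        # electricity consumed, in megawatt hours
EMISSIONS_T_CO2E = 552    # emissions, in tons of CO2 equivalent (assumed metric)
VEHICLE_YEARS = 123       # gasoline passenger vehicles driven for one year


def implied_carbon_intensity(energy_mwh: float, emissions_t: float) -> float:
    """Return the average kg CO2e emitted per kWh of electricity."""
    energy_kwh = energy_mwh * 1_000    # 1 MWh = 1,000 kWh
    emissions_kg = emissions_t * 1_000  # 1 t = 1,000 kg (assumption)
    return emissions_kg / energy_kwh


def implied_emissions_per_vehicle_year(emissions_t: float, vehicle_years: float) -> float:
    """Return the tons of CO2e attributed to one vehicle driven for one year."""
    return emissions_t / vehicle_years


intensity = implied_carbon_intensity(ENERGY_MWH, EMISSIONS_T_CO2E)
per_vehicle = implied_emissions_per_vehicle_year(EMISSIONS_T_CO2E, VEHICLE_YEARS)

print(f"Implied carbon intensity: {intensity:.3f} kg CO2e per kWh")
print(f"Implied emissions per vehicle-year: {per_vehicle:.2f} t CO2e")
```

Under these assumptions, the quoted numbers imply roughly 0.43 kg CO2e per kWh and about 4.5 t CO2e per vehicle-year. The same amount of energy would produce a different footprint on a grid with a different energy mix, which is one reason why energy and emissions are worth tracking as separate metrics.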

AI, ML, and LLMs, as well as the technology around blockchain, seem to me like a public experiment that should lead to better access to knowledge (AI) or better autonomy and privacy (blockchain). How the immediate and potential benefits compare against the negatives (environmental concerns, among others) is hard to tell right away. There is a short paper about the upsides and downsides of LLMs [source]. I will leave this discussion open and move on!

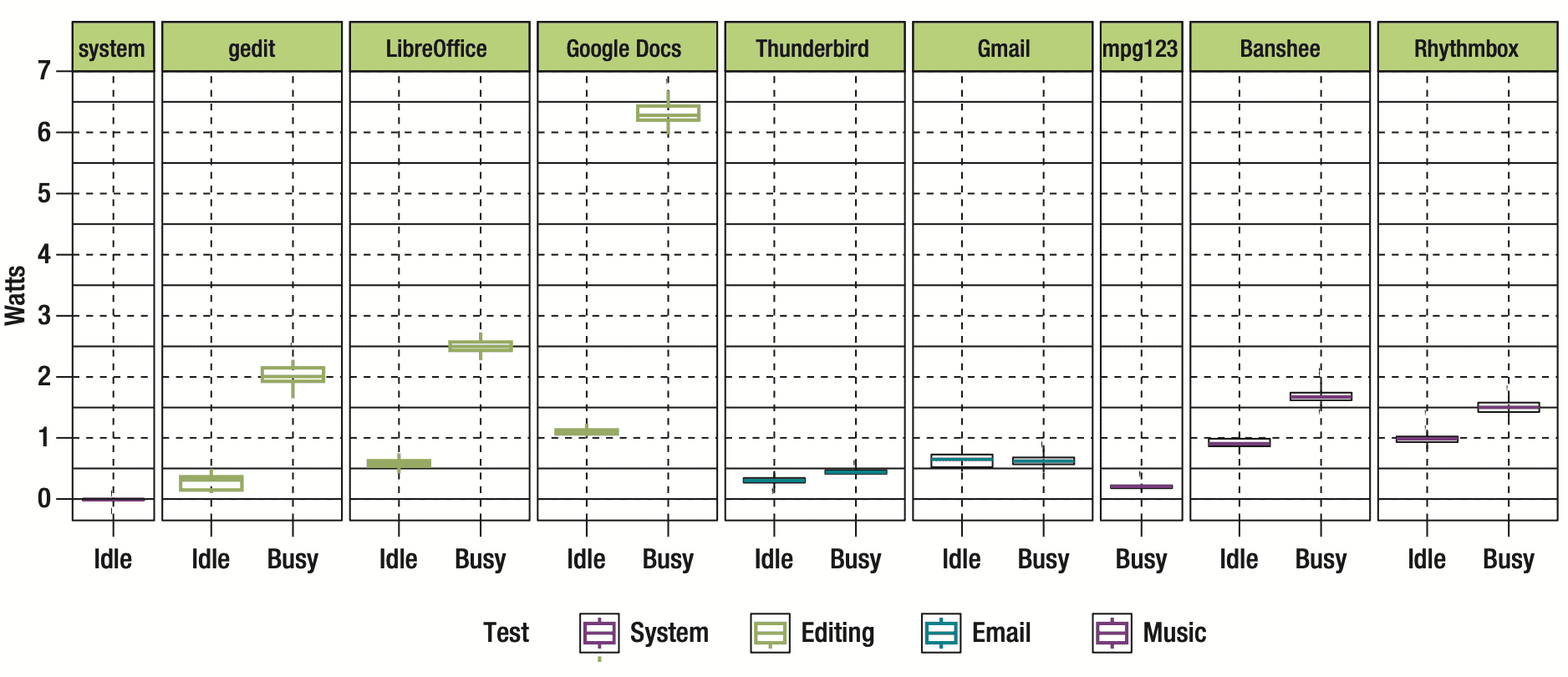

An older study from 2014 looked into the energy usage of various day-to-day software, such as Google Docs. It includes a nice visualization of the energy footprint, which shows a big difference between Google Docs and a similar program, LibreOffice. A detailed analysis of the energy consumption profile of Google Docs indicates that specific functionalities, especially document synchronization with cloud services and spell checking, significantly increase energy consumption because they raise the event rate with each keystroke [source]. Although Google Docs is a well-engineered and highly useful tool, it exemplifies increased resource utilization relative to similar products designed earlier. There is a good chance that the energy footprint of Google Docs, which is just used as an example here, has improved since. But we cannot know unless we measure it.

So, there are two lessons. First, it is important to actively assess resource usage, as it often remains unnoticed until scrutinized. Second, users of products like Google Docs cannot make an informed choice between products if the resources used are invisible to them. Perhaps the cloud synchronization features of Google Docs that increase energy consumption are worth the extra resources for your use case, perhaps not; the user cannot tell. Following this thought further, users also lack options to opt in to or out of certain features. The cost of using more resources is hidden by the invisibility factor of software.

[SIDE NOTE] The invisibility factor of software is one of the challenges in ethical software engineering [source], and you may argue to place environmental software engineering into this field. The invisibility factor also plays a large role in realizing and overcoming the challenges of energy and the resource footprint of software in the cloud.

Cloud & Energy

In the cloud, we share responsibility for our systems due to the nature of distributed systems and service offerings. We run software in other people's data centers, relying on service-level agreements (SLAs). The security of your systems in the cloud is not just in your hands; it also relies on the service and hardware infrastructure of those managing the cloud offering for you. This is mostly known in the security context, but it is also relevant when we talk about energy and sustainability, since we need access to and knowledge about the infrastructure and APIs to make any statements. Cloud providers are taking steps (ranging from very tiny to small) to give their customers transparency about the resources used. With open source and cloud native projects, it is becoming easier to maintain your own service offering and achieve digital sovereignty. If you do not maintain your own data center, the information needed to assess energy and resource consumption is invisible and virtualized away from you.

Ideally, cloud providers would expose detailed information about their infrastructure, including metrics about hardware components and energy consumption. But it is unlikely that this will happen soon, or at all: cloud providers would need to put in a lot of effort to get this information, it has security and price negotiation implications, and it raises the question of how cloud providers should prioritize sustainability versus advancements in technology. Measuring your resource consumption is therefore more challenging. Users have two options: either move away and start owning their hardware, or keep their systems where they are and approximate as best they can, without ever getting real numbers or taking steps to improve technical environmental sustainability. Either way, if you start looking into green software and the energy consumption of your software, you will likely encounter approaches that are based on correlations. Let’s take a look.

Relying on correlations like performance or cost ($) as key metrics does not suffice

As we will explore in the next article of this series, any measurement of the energy consumption of software is based on assumptions and close approximations if there are no hardware meters to support it. In that light, using correlations is a good starting point for building a strategy when no hardware support is available. It is important to keep in mind that a correlation remains a correlation and does not imply causation. If we try to solve this problem with software alone, cost ($) and performance look like good metrics to correlate with. But that is only true at first glance. There are plenty of reasons why these two metrics are not sufficient in the immediate, mid, and long term.

Firstly, cost ($) and performance are metrics that are relevant today and always were, but we have built wasteful software regardless. These metrics have not prevented that from happening.

Cases of performance as an indicator for energy consumption:

| Performance / Energy | Low-Energy Consumption | High-Energy Consumption |
|---|---|---|
| Poor Performance | Using TCP in scenarios where UDP is better suited, leading to unnecessary overhead and slower data transmission, without necessarily increasing energy consumption. Or a scenario as simple as unnecessarily long sleep times between processing packets. | Running an inefficient algorithm that requires excessive computation time and resources, like a poorly optimized regex or database query on large datasets. |
| Good Performance | The Linux Kernel is optimized for performance across a wide range of devices from servers to mobile phones, ensuring efficient use of system resources. It includes energy management features, like CPU frequency scaling (allowing the CPU to run at lower speeds when full power isn't needed), and system sleep states, which significantly reduce energy consumption while maintaining responsiveness and performance. | The process of mining cryptocurrencies like Bitcoin involves solving complex cryptographic puzzles to validate transactions and secure the network. This requires specialized hardware such as ASICs (Application-Specific Integrated Circuits) or GPUs (Graphics Processing Units) that consume a large amount of electricity to perform intensive computational work continuously. |

You can do the same for monetary cost ($) as an indicator for energy consumption:

| $ / Energy Usage | Low-Energy Consumption | High-Energy Consumption |
|---|---|---|
| Low Cost | Using shared or low-performance cloud instances for non-critical, background tasks. These services cost less and can be energy efficient due to their lower computational energy and shared nature. | Running older, depreciated hardware or opting for cheaper, non-energy-efficient cloud services. The lower cost can be misleading because these options may consume more energy due to less efficient operations or older technology. |
| High Cost | Investing in energy-efficient hardware or cloud services that reduce overall energy usage but come at a higher upfront cost, such as high-efficiency servers or renewable energy sources. | Utilizing large-scale, high-performance computing resources for complex simulations or data analysis. While these resources accelerate computation, their operational expenses and energy consumption are both high. |

From these examples, it’s clear that we cannot assess energy consumption with high confidence based on performance or cost ($) alone.
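The reason performance is a weak proxy can be written down in one line: energy is average power multiplied by time, so runtime alone leaves half of the equation unknown. The sketch below illustrates this with three made-up workload runs. The `Run` class and all numbers are hypothetical and chosen only to show the effect; they are not measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    """One workload execution with hypothetical, illustrative numbers."""
    name: str
    duration_s: float   # wall-clock runtime in seconds (the "performance" view)
    avg_power_w: float  # average power draw in watts (usually invisible in the cloud)

    @property
    def energy_j(self) -> float:
        # Energy (joules) = average power (watts) * time (seconds)
        return self.avg_power_w * self.duration_s


# Hypothetical runs, not measured data.
runs = [
    Run("fast, power-hungry", duration_s=10.0, avg_power_w=200.0),
    Run("slow, frugal", duration_s=40.0, avg_power_w=30.0),
    Run("slow, busy-waiting", duration_s=40.0, avg_power_w=150.0),
]

fastest = min(runs, key=lambda r: r.duration_s)
most_frugal = min(runs, key=lambda r: r.energy_j)

for run in runs:
    print(f"{run.name}: {run.duration_s:.0f} s, {run.energy_j:.0f} J")
print(f"Fastest run: {fastest.name}")
print(f"Lowest-energy run: {most_frugal.name}")
```

In this toy data set, the fastest run is not the one that uses the least energy, and the two runs with identical runtime differ in energy by a factor of five. Without a value for the average power, either measured or approximated, the runtime cannot tell these cases apart.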

We will explore in the next chapter how we can get better metrics right now about the energy consumption of our system. We will do that by moving through the abstraction layers, exploring how these layers are used together and where the responsibilities lie.